Artificial Intelligence

Neuromorphic chip architecture points to faster, more energy-efficient AI: IBM NorthPole

This paper argues that there is a strong need for energy-efficient AI computers. It describes a chip with a neural-inspired architecture, which IBM calls NorthPole, that achieves substantially higher performance, energy efficiency, and area efficiency than comparable architectures.

Inspired by the organic brain and optimized for inorganic silicon, NorthPole is a neural inference architecture that blurs the boundary between compute and memory by eliminating off-chip memory, intertwining compute with memory on-chip, and appearing externally as an active memory chip. NorthPole is a low-precision, massively parallel, densely interconnected, energy-efficient, spatial computing architecture with a co-optimized, high-utilization programming model.

The paper compares NorthPole with dozens of other AI chips, including chips from Intel, Nvidia, Google, Qualcomm, Amazon, Applied Brain Research, and Baidu. Some advanced AI chips use neuromorphic architectures. Moving and shuffling data takes a lot of energy, so in neuromorphic architectures the memory elements are intertwined with the processing elements at a very fine scale. This decentralized memory model, along with high data parallelism, is a key factor in greater energy efficiency. NorthPole is reported to be about 5 times faster and more energy efficient than the Nvidia H100. For more details, check out this YouTube video from Anastasi In Tech.

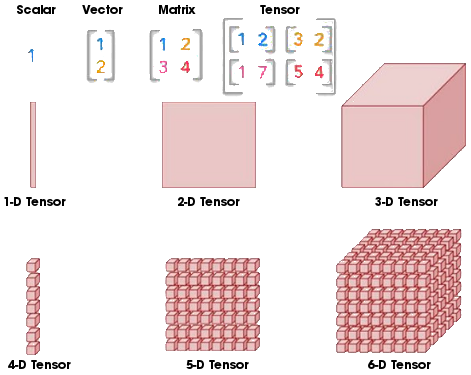

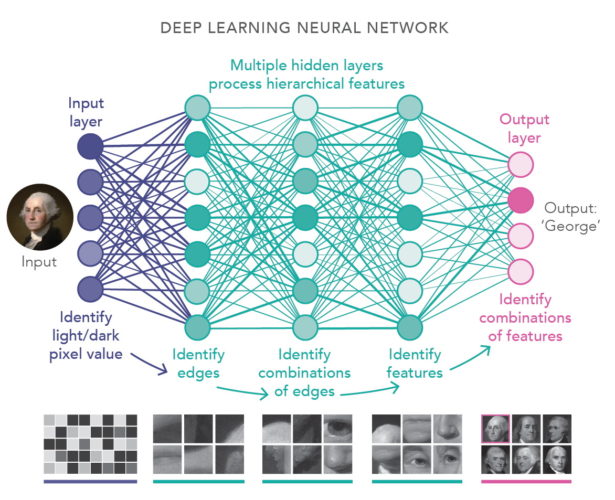

Data may be organized in a multidimensional array that is referred to as a "data tensor"; however, in the strict mathematical sense, a tensor is a multilinear mapping from a set of domain vector spaces to a range vector space. Observations, such as images, movies, volumes, sounds, and relationships among words and concepts, stored in a data tensor array may be analyzed by artificial neural network tensor methods. The computations involve matrix representations of linear transformations, including calculating the null space, range, and rank of a linear transformation. This linear algebra article shows the math in a simple example. Here is an image processing example:
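As a minimal sketch of these ideas (not the example from the linked article), the NumPy code below treats a tiny RGB image as a data tensor of shape (height, width, 3) and applies a grayscale conversion, which is a linear transformation from R^3 to R^1. The pixel values, the Rec. 601 luma weights, and the names `image`, `W`, and `null_space_basis` are assumptions chosen for illustration.

```python
import numpy as np

# A 2x2 RGB image stored as a data tensor of shape (height, width, channels).
# The pixel values are made up for illustration.
image = np.array([
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [255, 255, 255]],
], dtype=float)

# Grayscale conversion is a linear map from R^3 (RGB) to R^1 (luma).
# Its matrix representation is a 1x3 matrix (Rec. 601 weights, assumed here).
W = np.array([[0.299, 0.587, 0.114]])

# Apply the map to every pixel by contracting the channel axis with W.
gray = (image @ W.T)[..., 0]        # shape (2, 2)

# The rank is the dimension of the range of the transformation.
rank = np.linalg.matrix_rank(W)

# The null space holds the RGB directions that map to zero.
# Rows of Vt beyond the rank form an orthonormal basis for it.
_, _, Vt = np.linalg.svd(W)
null_space_basis = Vt[rank:]        # shape (2, 3)

print("grayscale image:\n", gray)
print("rank:", rank)
print("nullity:", null_space_basis.shape[0])
```

The rank (1) plus the nullity (2) equals the dimension of the input space (3), which is the rank-nullity theorem for this map.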

Since 2020, OpenAI has developed its generative artificial intelligence technologies on a massive supercomputer constructed by Microsoft, one of its largest backers, that uses 10,000 of Nvidia's graphics processing units (GPUs). An effort to develop its own AI chips would put OpenAI among a small group of large tech players, such as Google and Amazon.com, that have sought to take control over designing the chips that are fundamental to their businesses.

It is not clear whether OpenAI will move ahead with a plan to build a custom chip. An acquisition of a chip company could speed the process of building OpenAI’s own chip - as it did for Amazon.com and its acquisition of Annapurna Labs in 2015.

Building an AI-Friendly Culture

- Practice active listening

- Emphasize the importance of your people

- Share the vision

AI and the Organizational Structure

- AI will automate operational tasks

- Report generation and project tracking can be automated with AI

- Flatter, team-based structures will drive innovation

Lessons in AI Implementation

- Walmart implemented AI across the business, from inventory management to customer service

- IKEA uses AI for routine customer inquiries

- Bank of America uses AI to monitor transactions

- Start with the problem

- Avoid layering new tech onto old processes

- Involve end users early and often

- Genpact

AI and Your Workforce

- AI and employee retention

- AI and employee development

- Learning and Development Teams

- Personalized learning

- Recommend resources

- Automate evaluations

- Pair mentors

- AI and performance management

- Analyze multiple data sources

- Personalize performance plans

- Highlight individual contribution

- Uncover new metrics

- Streamline roles and responsibilities

- AI and team effectiveness

- AI augments our ability to work together and boosts communication

- Create transparency and accountability

- Balance workloads

- Create positive teams

AI and Business Value

- AI and business differentiation

- Mitigate AI risks

- Data classification framework

- Use acronyms and mnemonics

- Use diverse datasets

- Implement data governance

- Proactive training

- Ethics and Fairness

- Ensure transparency

- Test regularly

- Update models

- Audit

- Align with goals

- Prioritize privacy

- The right talent

- Legal

- Regulatory

- Ethical

- Ensure transparency

Leadership in the age of AI

Just finished the course “AI Challenges and Opportunities for Leadership” by Conor Grennan!

Gen AI - Building AI agents

Tuesday, May 21 - Thursday, June 13 2024

Generative AI: From Prototype to Monetization

- The Google model ecosystem, led by Gemini, our largest and most capable AI model, including:

- What’s new in Gemini 1.5

- How to use larger context windows (up to 1 million tokens) effectively

- Multimodal use cases for businesses

- Function Calling in Gemini to connect to external systems

- More efficient training and serving, while increasing model performance

- Better understanding and reasoning across modalities

- Overview of AI on Google Cloud, including 1P and 3P foundation models: We will provide an overview of the AI services offered by Google Cloud, including foundation models from Google and third-party providers, including:

- Big partners and OSS projects in this space that our users are finding value with: LangChain, LlamaIndex, Chroma DB, Milvus, etc., and how to use them with Gemini

- Open and Partner models, including Llama, Claude 3, and more

- Limitations of generative AI: We will discuss the main limitations of generative AI, such as bias, frozen training data, and safety, and how we’re overcoming some of these limitations with larger context windows and function calling.

- Choosing the right model and approach: We’ll discuss best practices for using generative models, in terms of model selection and how to determine when to use off-the-shelf models vs. fine-tuned models.

- Protection with generative AI services: We’ll discuss our industry-first approach to indemnity for potential legal risks related to copyright infringement claims for both the training data used by Google and the outputs generated by customers

Deep dive into the Gen AI ecosystem

- What you’ll build: Chatbot for customer service that is grounded in your content and connects to your CRM, inventory, and support systems.

- Gen AI stack for developers: Model, tools & functions, orchestration, and deployment

- Backend and frontend: Backend development in Python and other SDKs vs. application development with GenKit

- Gen AI tools and patterns: Prompt tuning in Gemini, Retrieval Augmented Generation (RAG)

Building out code pipelines for your Gen AI customer service app

- Getting started with the Gemini model and API in Vertex AI

- Prompt design and prompt tuning

- Generation configuration settings

- Overview of different modalities in Gemini

- Building an initial RAG implementation grounded in your data

- Working with multimodal data: images, PDFs, audio, video

- Best practices for accelerating prototyping and development

- Prototyping a user interface with Python web frameworks
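To make the RAG item above concrete, here is a minimal, library-free sketch of the retrieval and prompt-building steps. It is an illustration under stated assumptions, not the course's implementation: the documents, the bag-of-words `embed` function, and the names `retrieve` and `build_prompt` are all hypothetical, and no model API is called. A real system would use a learned embedding model and a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical support snippets standing in for "your data".
DOCUMENTS = [
    "Orders can be returned within 30 days of delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by chat from 9am to 5pm on weekdays.",
]

def embed(text):
    """Toy embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Ground the question in retrieved context before it is sent to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

prompt = build_prompt("How long does shipping take?", DOCUMENTS)
print(prompt)
```

The resulting `prompt` string is what would be passed to a generative model, so that its answer is grounded in the retrieved snippet instead of only its training data.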

Defining user journeys for your Gen AI customer service app

- Designing the right user experience for your chatbot

- Review key conversation design principles

- Stub out user experience

- Define user flows

- Implement a frontend layer (GenKit, Firebase)

- Start testing inputs/outputs/eval

Deep dive into connecting Gen AI models to the real world and agent workflows

- Overview of agents and relevant use cases

- Explore connections to your databases, CRM, support system, and other external systems

- Different ways to implement grounding

- Gemini Function Calling to connect to external APIs

- Building RAGs on vector DBs, APIs, and YouTube

- Deep dive on tools and function calling

- Deep dive on orchestration (LangChain)

- Talk through patterns for connecting to external systems
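The general pattern behind function calling can be sketched without any model SDK: the model returns a structured request naming a function and its arguments, and the application looks that function up, runs it, and returns the result. The code below is a hedged illustration only. It does not use the Gemini API, and `get_stock`, `TOOLS`, `dispatch`, and the JSON shape of `fake_model_output` are hypothetical names and formats chosen for this example.

```python
import json

def get_stock(sku):
    """Hypothetical inventory lookup standing in for an external system."""
    inventory = {"A100": 12, "B200": 0}
    return {"sku": sku, "in_stock": inventory.get(sku, 0)}

# Registry of the functions the model is allowed to request.
TOOLS = {"get_stock": get_stock}

def dispatch(model_output):
    """Run the function named in a JSON function-call request and return its result."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything outside the registry instead of executing arbitrary names.
        raise ValueError(f"unknown function: {name}")
    return TOOLS[name](**call.get("args", {}))

# Hand-written stand-in for what a model might emit for "Is A100 in stock?"
fake_model_output = '{"name": "get_stock", "args": {"sku": "A100"}}'
result = dispatch(fake_model_output)
print(result)
```

Keeping an explicit registry means the application, not the model, decides which external systems can be reached.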

Deep dive into quality, evaluations, responsible AI, and safety

- Improving response quality and accuracy

- Evaluation metrics and measures of success

- Deep dive on user experiences beyond chatbots

- Deep dive on evaluation, responsible AI, and safety

Bringing it all together with orchestration, productionization, and deployment

- Walk through options for evaluation and monitoring

- Discuss productionization and deployment options

- Deploy your application to Vertex AI's Reasoning Engine for a secure, scalable, and managed runtime environment

- Patterns and approaches for custom orchestration

- Common pitfalls and challenges of orchestration